How to Run AI Models Locally on Windows With Ollama

You can run AI models similar to the ones behind ChatGPT locally on your computer, and once a model is downloaded, it works without an internet connection. Several tools support this; this guide uses Ollama.

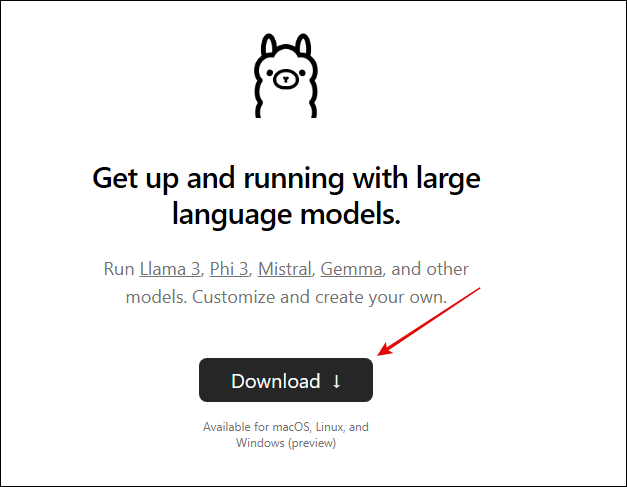

Download and Install Ollama

Step 1: Navigate to the Ollama website and click the Download button.

Step 2: Select your OS and click Download.

Step 3: Double-click the downloaded file > click Install > follow the installation instructions.

Once installed, you should see a pop-up saying Ollama is running.

Step 4: Open a terminal. On Windows, press Windows + R, type cmd, and press Enter.

Step 5: Download your first AI model using the command below. Replace model with the name of a model from the Ollama library, such as llama3 (Llama 3), phi3 (Phi 3), mistral (Mistral), or gemma (Gemma). Be patient; large models can take a while to download.

ollama pull model
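Once the download finishes, you may want to confirm that Ollama is running and that the model is actually on disk. In the terminal, ollama list prints the downloaded models. The same check can be sketched in Python, which this guide does not otherwise use: the example assumes Python 3.9 or later is installed and that Ollama is serving its local HTTP API on the default address http://localhost:11434. The function names are illustrative only, not part of Ollama.

```python
import http.client
import json
import urllib.request

# Assumption: Ollama is serving on its default local address and port.
OLLAMA_URL = "http://localhost:11434"

# Talk to localhost directly, ignoring any system-wide HTTP proxy settings.
_opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))


def ollama_is_running(base_url: str = OLLAMA_URL, timeout: float = 2.0) -> bool:
    """Return True if an HTTP server answers with status 200 at base_url."""
    try:
        with _opener.open(base_url, timeout=timeout) as response:
            return response.status == 200
    except (OSError, http.client.HTTPException):
        # Covers refused connections, timeouts, and malformed replies.
        return False


def parse_model_names(tags_json: str) -> list[str]:
    """Extract model names from the JSON body returned by GET /api/tags."""
    data = json.loads(tags_json)
    return [entry["name"] for entry in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models have been downloaded."""
    with _opener.open(f"{base_url}/api/tags", timeout=5) as response:
        return parse_model_names(response.read().decode("utf-8"))
```

With Ollama running, calling ollama_is_running() and then list_local_models() should return a list that includes the model you pulled, usually with a tag suffix such as :latest.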

How to Communicate With Downloaded Models on Ollama

Now that you've downloaded a model, you can chat with it much as you would with Gemini or ChatGPT in a web interface. Follow the steps below.

Step 1: Open a terminal on your computer.

Step 2: Type the command below. If you downloaded a different model, replace llama3 with its name.

ollama run llama3

Step 3: Finally, type your prompt and press Enter as you would on ChatGPT or Gemini. The model's reply appears in the terminal, and you can keep the conversation going with further prompts. Type /bye to end the session.
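If you would rather send a prompt from a script than type it into the interactive session, Ollama also exposes a local HTTP API. The following is a minimal sketch, assuming Python 3 is installed, Ollama is running on its default address http://localhost:11434, and the llama3 model has already been pulled. The helper names build_generate_request and ask are hypothetical and chosen only for this example.

```python
import json
import urllib.request

# Assumption: Ollama is serving on its default local address and port.
OLLAMA_URL = "http://localhost:11434"


def build_generate_request(model: str, prompt: str) -> bytes:
    """Encode the JSON body for POST /api/generate.

    "stream": False asks for one complete JSON reply instead of a
    sequence of partial chunks.
    """
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def ask(model: str, prompt: str, base_url: str = OLLAMA_URL) -> str:
    """Send a single prompt to a local model and return its text reply."""
    request = urllib.request.Request(
        f"{base_url}/api/generate",
        data=build_generate_request(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Connect to localhost directly, ignoring system-wide proxy settings.
    opener = urllib.request.build_opener(urllib.request.ProxyHandler({}))
    # Local generation can be slow on modest hardware, so allow a long wait.
    with opener.open(request, timeout=300) as response:
        body = json.loads(response.read().decode("utf-8"))
    return body["response"]
```

For example, print(ask("llama3", "Why is the sky blue?")) would print the model's answer. Unlike the interactive session, each call here is independent, so the model does not remember earlier prompts.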

FAQ

Can You Run Generative AI Locally?

Yes. For text generation, tools like Ollama run language models locally, as shown above. For image generation, Stable Diffusion is a popular option, and tools like Stable Diffusion WebUI and InvokeAI provide local interfaces for it.
